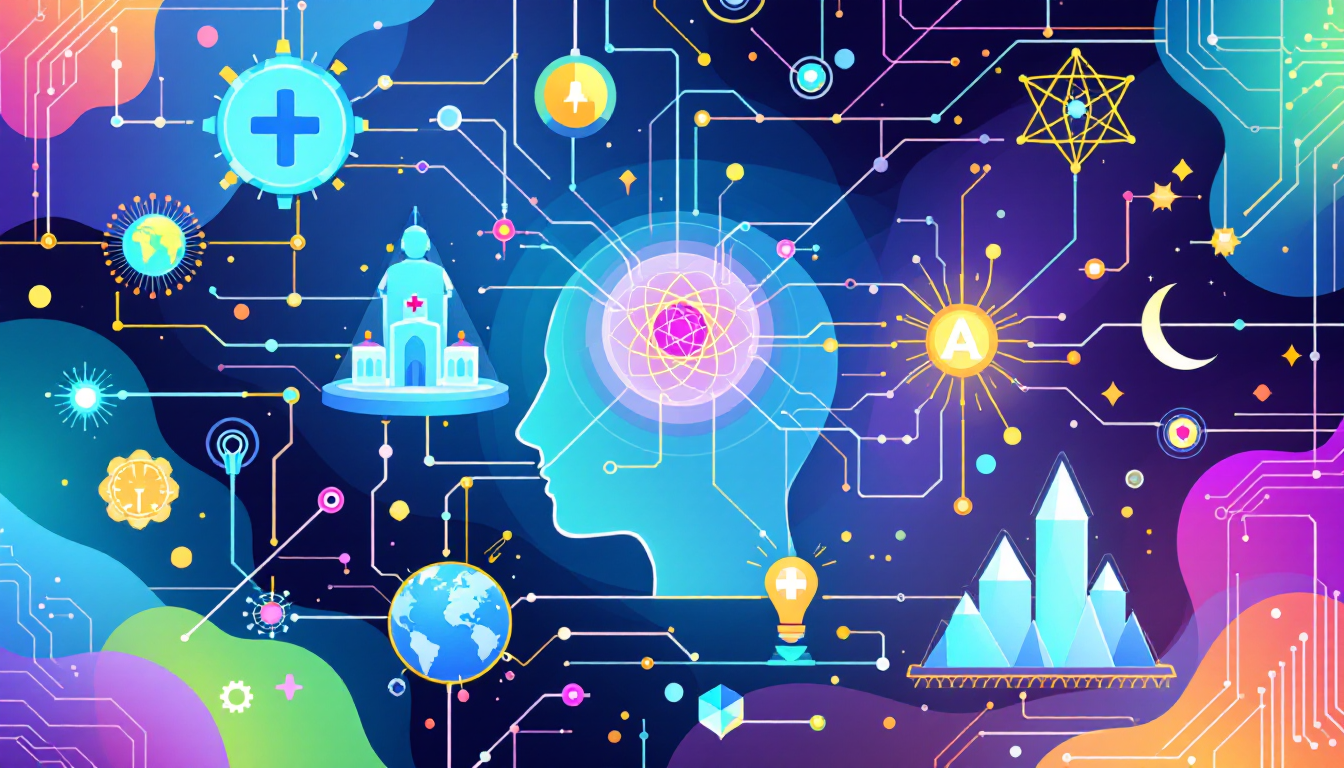

Top AI Innovations: Healthcare, Quantum, Ethics & Sustainability in Focus

Explore this week’s AI developments, from healthcare diagnostics and quantum computing advances to ethical AI initiatives and sustainable tech trends, that are reshaping our digital future.